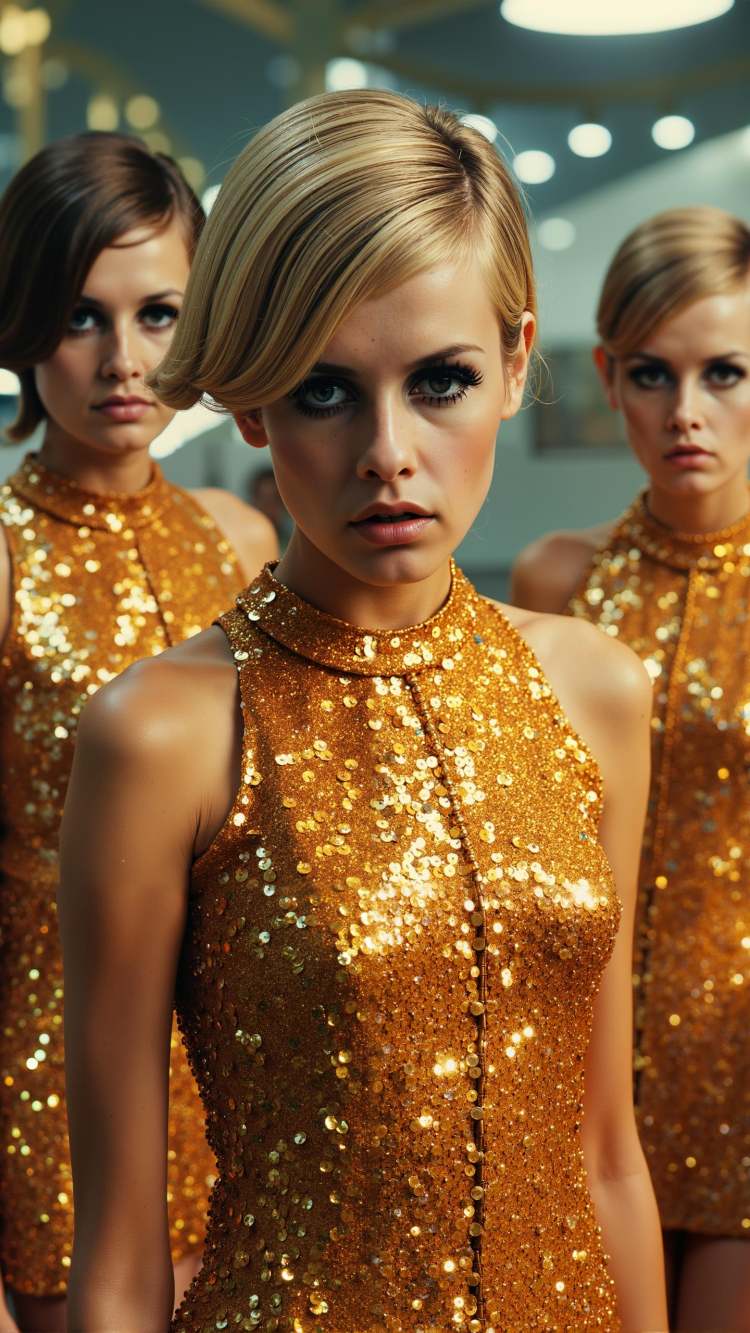

The LoRA was trained on only two single transformer blocks at rank 32, which is what keeps the file this small (2.3 MB) without any loss of quality.
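To see why two blocks at rank 32 give a file of only a few megabytes, here is a rough back-of-the-envelope estimate. It is a sketch under assumptions that are not stated on this page: a hidden width of 3072, LoRA applied only to the query, key, and value projections of each block, and weights stored in 16 bits. The actual target layers and precision of this LoRA may differ.

```python
def lora_param_count(layer_shapes, rank):
    """Count the parameters LoRA adds to a set of linear layers.

    Each (d_in, d_out) layer gets two low-rank matrices:
    A with shape (rank, d_in) and B with shape (d_out, rank).
    """
    return sum(rank * (d_in + d_out) for d_in, d_out in layer_shapes)


RANK = 32            # from the description above
NUM_BLOCKS = 2       # from the description above
HIDDEN = 3072        # ASSUMPTION: hidden width of the base model
BYTES_PER_PARAM = 2  # ASSUMPTION: weights saved as fp16/bf16

# ASSUMPTION: only the q, k, v projections of each block are adapted.
layers_per_block = [(HIDDEN, HIDDEN)] * 3

params = NUM_BLOCKS * lora_param_count(layers_per_block, RANK)
size_mb = params * BYTES_PER_PARAM / 1e6

print(f"{params:,} parameters, about {size_mb:.2f} MB")
```

Under these assumptions the estimate comes out in the same range as the 2.3 MB in the title. Adapting more layers per block, or more blocks, scales the size up linearly.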

Because the LoRA is applied to only two blocks, it is less prone to bleeding into the rest of the image. Many thanks to 42Lux for their support.

Twiggy [2.3MB Single Transformer F LoRa] - v1.0

LORA

Version Detail

Base Model: F.1

Project Permissions

Use in 吐司 Online

As an online training base model on 吐司

Use without crediting me

Share merges of this model

Use different permissions on merges

Use Permissions

Sell generated contents

Use on generation services

Sell this model or merges